How to fit a manifold?

This is a quick introduction of manifold fitting. A PDF version is also available. More details can be found in:

- Fefferman, C., Ivanov, S., Kurylev, Y., Lassas, M., & Narayanan, H. (2018, July). Fitting a putative manifold to noisy data. In Conference On Learning Theory (pp. 688-720). PMLR.

- Yao, Z., & Xia, Y. (2019). Manifold fitting under unbounded noise. arXiv preprint arXiv:1909.10228.

- Yao, Z., Su, J., Li, B., & Yau, S. T. (2023). Manifold fitting. arXiv preprint arXiv:2304.07680.

Method

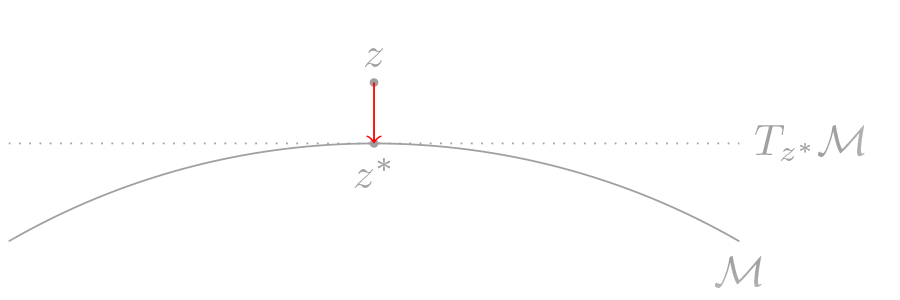

Let \(z\) be the point of interest, which is close to \(\mathcal{M}\), and \(z^* = \arg\min_{z^\prime\in\mathcal{M}} d(z^\prime,z)\) be the projection of \(z\) on \(\mathcal{M}\). Intuitively, the estimation of manifold can be viewed as ‘‘pushing’’ \(z\) to \(z^*\).

This pushing process involves two key components: direction and distance. The direction should be perpendicular to \(T_{z*}\mathcal{M}\), which can be deduced from the local ‘‘covariance’’ structure, while the distance \(d(z,\mathcal{M})\) might be estimated using the local average. The following subsections will introduce some intuitive concepts related to this process. For more details, please refer to the papers mentioned previously.

Estimate Direction from Local ‘‘Covariance’’

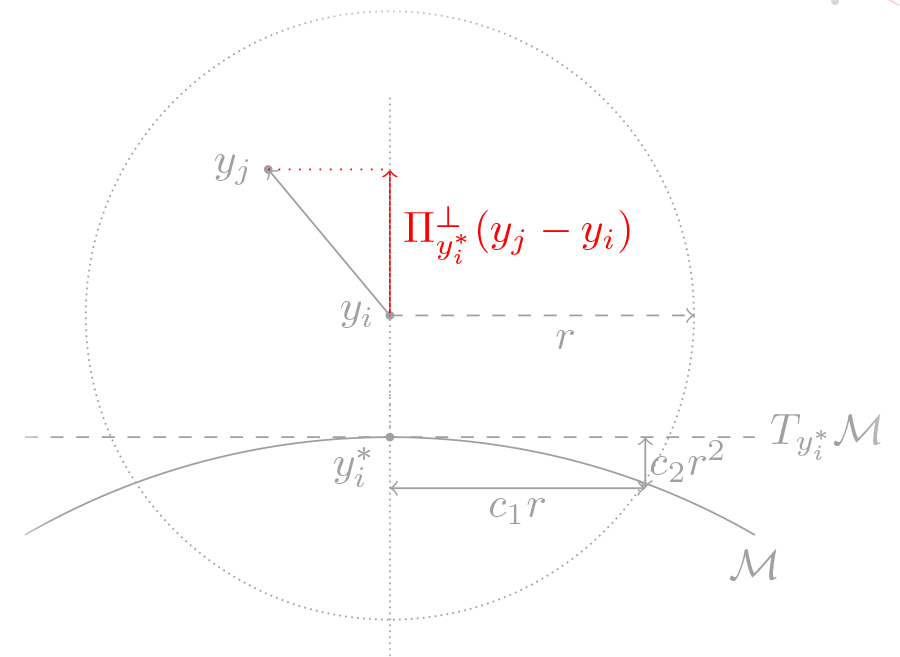

For each point \(y_i\in\mathcal{Y}_N\), let \(y_i^*\) be its projection on \(\mathcal{M}\). Assume the normal space of \(\mathcal{M}\) at \(y_i^*\) has orthonormal basis \(\{u_1,\dots,u_{D-d}\}\), we use

\[\Pi_{y_i^*}^\perp = \bigl(u_1,\dots,u_{D-d}\bigl) \bigl(u_1,\dots,u_{D-d}\bigl)^\top = \sum_{k=1}^{D-d} u_k u_k^\top\]to represent the projection matrix onto this space. This projection matrix can be estimated from the local variation centered at \(y_i\), and the estimator of \(\Pi_{y_i^*}^\perp\) is denoted as \(\widehat{\Pi}_{y_i}^\perp\).

Let \(\mathcal{B}_D(y_i,r)\) be the \(D\)-dimensional Euclidean ball centered at \(y_i\) with radius \(r\). If \(r\) is ‘‘large’’ enough, such that \(\|y_i - y_i^*\| \leq \|\xi_i\|\leq c_0r\), the area of \(\mathcal{B}_D(y_i,r)\cap \mathcal{M}\) roughly has radius \(c_1 r\), and the variation of \(\mathcal{M}\) along the normal direction is less than \(c_2 r^2\) due to the reach. Then, since the distribution of \(X\) is smooth, the variation of \(Y-y_i\) along direction:

- \(\leftrightarrow\): is roughly in the order of \((c_1 r)^2 + \sigma^2\);

- \(\updownarrow\): is roughly bounded above by the order of \((c_2 r^2)^2 + \sigma^2\).

Thus, we can define

\[\widehat\Sigma_{r,i} = \frac{\sum_{j=1}^N (y_j-y_i)(y_j-y_i)^\top \mathbb{I}(\|y_j-y_i\|\leq r)}{\sum_{j=1}^n \mathbb{I}(\|y_j-y_i\|\leq r)}.\]Then perform SVD on \(\widehat\Sigma_{r,i}\) to obtain \(\{\lambda_1<\cdots<\lambda_D\}\) and \(\{v_1,\dots,v_D\}\), and estimate \(\Pi_{y_i^*}^\perp\) with \(\widehat{\Pi}_{y_i}^\perp = \bigl(v_1,\dots,v_{D-d}\bigl) \bigl(v_1,\dots,v_{D-d}\bigl)^\top = \sum_{k=1}^{D-d} v_k v_k^\top,\) whose estimation error can be bounded.

Smoothing System

To make the overall estimation smooth enough, the weight function for \(y_i\) with respect to \(z\) is defined as

\[\widetilde{\alpha}_i(z)=\left(1 - \frac{\|z - y_i\|^2}{r^{\prime 2}}\right)^{\beta} \mathbb{I}(\|z-y_i\|\leq r^\prime), \quad \alpha_i(z) = \frac{\widetilde{\alpha}_i(z)}{\sum_{i=1}^n\widetilde{\alpha}_i(z)},\]where \(\beta\geq 2\) is a parameter corresponding to the smoothness. Then, for \(z\), a smooth reference point can be given by \(\widehat{\mu}_z = \sum_{i=1}^N \alpha_i(z) y_i\), and a smooth projection matrix is calculated as

\[\Psi_z = \mathbb{P}_{D-d}\left(\sum_{i=1}^N\alpha_i(z)\widehat\Pi_{y_i}^\perp\right),\]where \(\mathbb{P}_{k} (A)\) stands for the projection of matrix \(A\) onto the span space corresponding to its largest \(k\) eigenvalues.

The Manifold Estimator

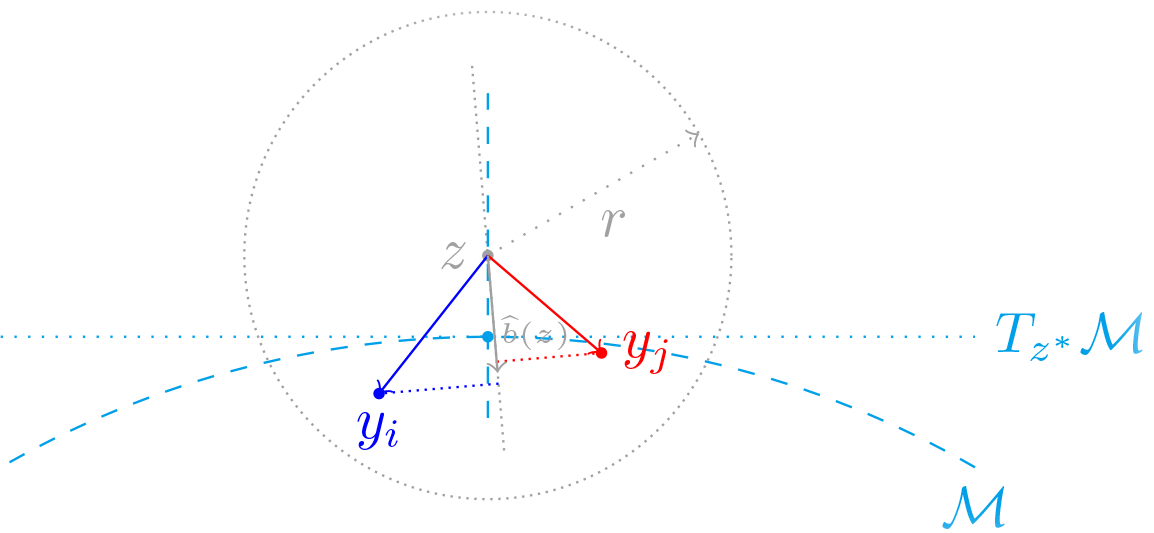

The vector from \(z^*\) to \(z\) can be estimated with the bias vector

\[\widehat{b}(z) = \sum_{i=1}^n \alpha_i(z)\Psi_z (z - y_i) = \Psi_z (z - \widehat{\mu}_z),\]which can be shown

- \(\|\widehat{b}(z)\|\) close to \(\|z-z^*\|\);

- Jacobian matrix of \(\widehat{b}(z)\) is close to \(\Phi_z\), i.e. \(\|J_b(z) - \Psi_z\| \leq C\sigma/r^\prime + o_p(1)\);

- Hessian matrix of \(\widehat{b}(z)\) is lower bounded.

Finally, the manifold estimator is given by

\[\widehat{\mathcal{M}} = \{z\in\mathbb{R}^D: d(z,\mathcal{M})<cr^\prime, \widehat{b}(z) = 0\}.\]Under all the error bounds and all the smoothness, for any \(z^\prime\in\widehat{\mathcal{M}}\), with high probability,

- \(z^\prime\) is close to \(\mathcal{M}\);

- in its neighborhood, \(\widehat{b}(z)\) is rank \(D-d\).

Hence, with high probability, \(\widehat{\mathcal{M}}\) is a \(d\)-dimension manifold, close to \(\mathcal{M}\), and its reach can be bounded via the Hessian of \(\widehat{b}(z)\).

Enjoy Reading This Article?

Here are some more articles you might like to read next: